
Panel

The Question of Autonomy and Human Intention in Art and AI

Speakers:

Isabella Salas, Yuri Suzuki, Maya Indira Ganesh, Ali Nikrang

Profile:

Isabella Salas

Born and raised in Mexico City, Isabella Salas creates interdisciplinary artworks that use artificial intelligence, video, synthesized sound, and video projection as media for multisensorial digital experiences grounded in neuroaesthetics design. Her work has been presented at digital art institutions and events including the Société des arts technologiques, MUTEK AI Lab, Gamma XR Lab, and TransArt Festival.

Profile:

Maya Indira Ganesh

Maya Indira Ganesh is a tech and digital cultures theorist. Her dissertation research examined the political-economic, social, and cultural dimensions of how the ‘ethical’ is being shaped in the data-field worlds of AI and autonomous technologies, worlds rich in histories and imaginaries. Before this, she worked at the intersection of gender justice, digital activism, and international development.

Soundbite:

“What I am interested in is how the technology becomes seductive in enhancing and augmenting what humans already do. Perhaps there is more that is opaque or inaccessible about the way that AI technologies are architected, further up the chain. But things appear to the user as fairly remarkable, overwhelming even, in what the tool is able to do or generate.”

Maya Indira Ganesh, reminding us not to be blinded by novelty or (seeming) complexity

Takeaway:

Because we can’t define creativity precisely, attempts to categorize algorithmically produced work as ‘creative’ or ‘not creative’ are futile. The panelists all sidestepped a question to this effect, seeming more interested in clarifying how AI helped their process than in judging the authenticity of what (or how) their machines produced.

Takeaway:

Intentionality is just as thorny as ‘creativity,’ and using it as a lens for analyzing automation prompts a few interesting questions. First, to what degree can an artist or creator erase themselves from a process they set in motion? Second, how have automation’s failures, such as glitches and crashes, shaped our aesthetics?

Profile:

Yuri Suzuki

Sound artist Yuri Suzuki works in installation and instrument design and is best known for the synth he designed for Jeff Mills (2015) and his reimagining of the Electronium for the Barbican (2019). More recently, London-based Suzuki became a partner at the international design studio Pentagram, where he has worked on branding projects for clients including Roland and the MIDI Association.

Profile:

Ali Nikrang

Ali Nikrang is a key researcher and artist at the Ars Electronica Futurelab in Linz, Austria. His background spans both technology and art, and his research centers on the interaction between humans and AI systems in creative tasks, with a focus on music. He is the creator of Ricercar, an AI-based collaborative composition system for classical music.

Soundbite:

“I notice that some artists that use AI are looking for moments when the algorithm intervenes, like a glitch, the machine showing itself as kind of an aesthetic. So there is something to be said about the actual process of selecting what gets into a final work.”

Yuri Suzuki, on using AI versus picking things that sound machinic

Soundbite:

“One question that is coming is ‘Do we have to pay royalties?’ for art created by machine learning trained on existing art protected under copyright.”

Yuri Suzuki, on the lawsuits on the horizon

Reference:

Taking up the daunting question “Can machines think?”, Ars Electronica Futurelab’s Interaction and Collaboration in AI-based Creative and Artistic Applications research group concentrates squarely on AI co-creation. Its portfolio of projects includes the AI-based musical companion Ricercar, an AI intervention that speculatively completed Gustav Mahler’s unfinished Tenth Symphony, research into the antecedents of AI instruments, and ruminations on how machines perceive music.

Soundbite:

“When I’m communicating with AI, I feel like I’m talking to a two-year-old, it’s sleepy, and cries, and just does what it wants. You cannot expect [it] to do exactly what you tell it, and you have to learn to communicate with it.”

Isabella Salas, de-romanticizing machine intelligence

Soundbite:

“One artist that I think is doing very interesting work is Nora Al-Badri, who is a German-Iraqi artist using Generative Adversarial Networks to do speculative archaeology, to look at artifacts and antiquities that have been lost, stolen, or are missing from Iraq. This tells you more about the state of that archive than about AI itself.”

Maya Indira Ganesh, on using AI to catalyze cultural and political conversations versus treating it as an end unto itself

Reference:

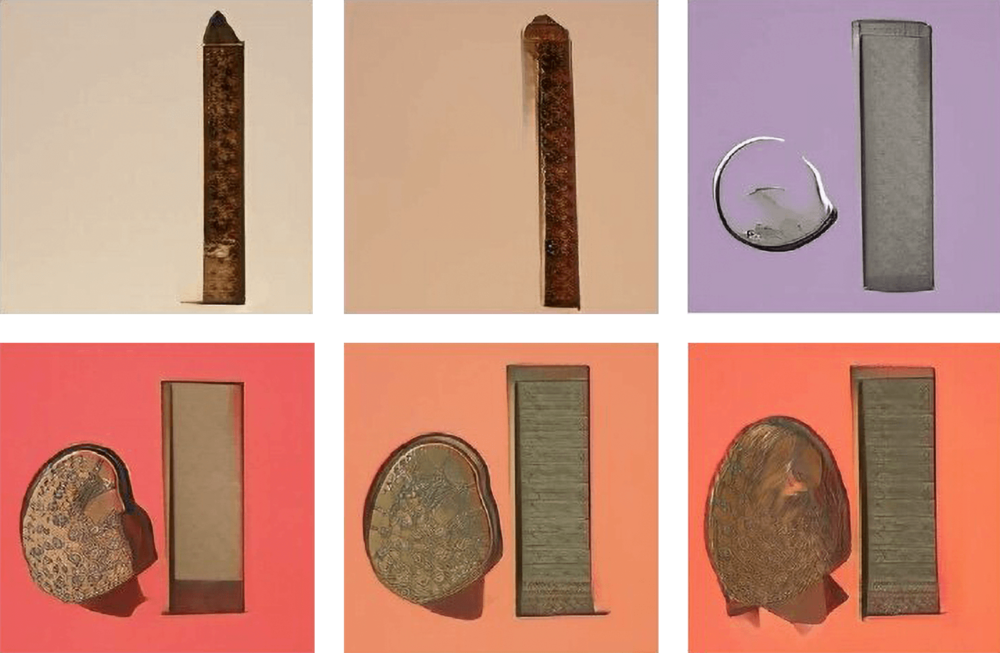

Namechecked by Maya Indira Ganesh as a compelling case study for thinking about AI and culture, Nora Al-Badri’s Babylonian Vision (2020) uses Generative Adversarial Networks to create an image of a composite Mesopotamian, Neo-Sumerian, and Assyrian artifact, based on a training set of 10,000 photographs from major museum collections. Sourced at times through public APIs but “often crawling and scraping without the permission of the institution,” the work generates new synthetic images from open (and purloined) cultural data. This work of speculative archaeology was shown in the Inga Seidler-curated exhibition “Possessed” at Kunsthalle Osnabrück.

Soundbite:

“I’m always looking for where the shadow human is in the loop.”

Maya Indira Ganesh, reminding us there is always a (hu)man behind the curtain that we should pay attention to

Soundbite:

“The language is so laden with mythology and imaginaries. Even this idea of autonomy is understood as a fetishized isolation. Just look around, nothing works on its own—everything is radically interconnected.”

Maya Indira Ganesh, wondering why we always default to ‘intention’ and ‘autonomy’ when discussing AI
