1,571 days, 2,407 entries ...

Newsticker, link list, time machine: HOLO.mg/stream logs emerging trajectories in art, science, technology, and culture, every day.

To illuminate how generative AI models like Midjourney and Stable Diffusion derive their worldview from 5.8 billion image-text pairs, German data journalist Christo Buschek and Canadian software artist Jer Thorp deconstruct LAION-5B, the (only) open-source foundation dataset, in an incisive visual essay. Digging deep into its troubled contents, its algorithmic (not human) curation, and its entanglements with other systems, the two warn about stacking "models on top of models, and training sets on top of training sets."

“Due to the high plasticity and adaptability of organoids, Brainoware has the flexibility to change and reorganize in response to electrical stimulation, highlighting its ability for adaptive reservoir computing.”

Anicka Yi’s solo exhibition “A Shimmer Through The Quantum Foam” opens at Esther Schipper, Berlin, evolving the Korean-American artist’s notion of the “biologized machine” with new works. Visitors enter a hybrid ecosystem of fleshy landscapes created with machine learning models and suspended luminescent pods resembling Radiolaria. As the soft glow of an aqueous ooze—indicative of life’s marine origins—sprawls across the gallery floor, a custom-made scent by perfumer Barnabé Fillion fills the air.

American software artist Casey Reas returns to Berlin’s DAM Projects with “Conjured Terrain,” a solo exhibition of new Untitled Film Stills and Compressed Cinema digital video works set to (and driven by) the electroacoustic soundscapes of German artist and composer Jan St. Werner. Building on a body of images ‘conjured’ from feature films fed to generative adversarial networks (GANs) in 2018, Reas revisits—and celebrates—the raw visual grammar of early machine learning experiments from that era.

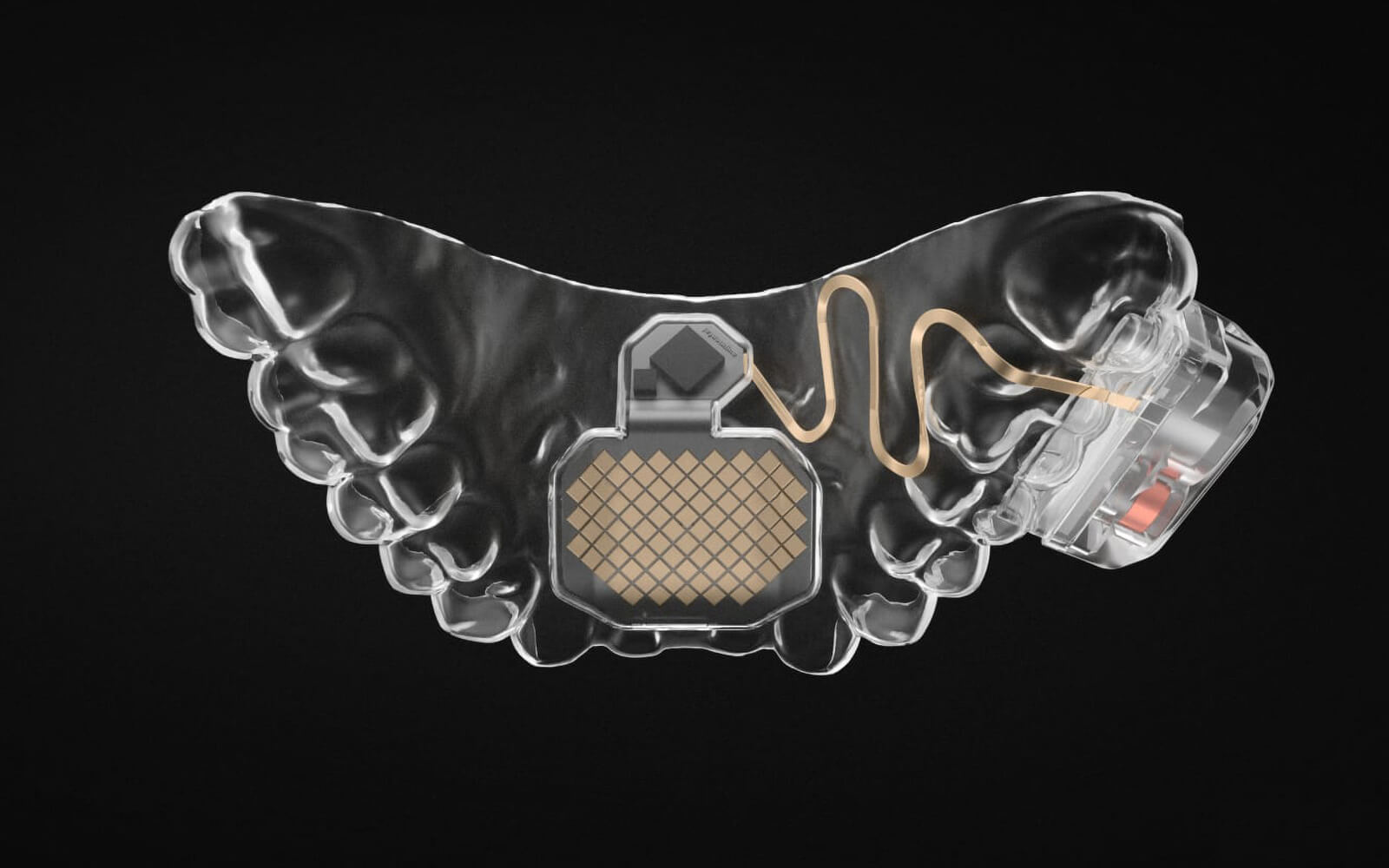

MouthPad^, a smart oral splint that enables hands-free human-computer interaction using tongue, jaw, head, and breathing gestures, wins the grand prize in Innovation: Early Stage Technology at the Cannes Lions International Festival of Creativity. Developed by MIT Media Lab spinoff Augmental, the forthcoming machine learning-powered 'tongue trackpad' gives users (not just those lacking mobility) full rein over their gadgets, letting them send emails, edit photos, or play videogames while remaining invisible to others.

“Regulation should avoid endorsing narrow methods of evaluation and scrutiny for GPAI that could result in a superficial checkbox exercise. This is an active and hotly contested area of research and should be subject to wide consultation, including with civil society, researchers and other non-industry participants.”

“We conclude that LLMs such as GPTs exhibit traits of general-purpose technologies, indicating that they could have considerable economic, social, and policy implications.”

“Just as quarantining helped slow the spread of the virus and prevent a sharp spike in cases that could have overwhelmed hospitals’ capacity, investing more in safety would slow the development of AI and prevent a sharp spike in progress that could overwhelm society’s capacity to adapt.”

“Without novel human artworks to populate new datasets, AI systems will, over time, lose touch with a kind of ground truth. Might the next version of DALL-E be forced to cannibalize its predecessor?”

“Neurography [is] the process of framing and capturing images in latent spaces. The Neurographer controls locations, subjects and parameters.”

“No, these renderings do not relate to reality. They relate to the totality of crap online. So that’s basically their field of reference, right? Just scrape everything online and that’s your new reality.”

“In the absence of a capacity to reason from moral principles, ChatGPT was crudely restricted by its programmers from contributing anything novel to controversial—that is, important—discussions. It sacrificed creativity for a kind of amorality.”

“We are thrilled to announce that our campaign to gather artist opt outs has resulted in 78 million artworks being opted out of AI training.”

“It seems that forcing a neural network to ‘squeeze’ its thinking through a bottleneck of just a few neurons can improve the quality of the output. Why? We don’t really know. It just does.”

“Even though © doesn’t provide for any protection against biometric use, it does prohibit the redistribution of the image file. CC allows it. Ideal for packaging files into datasets.”


In an essay full of playful examples (statistically modelling cannonballs dropped from different heights, a neural net theory of cat recognition), Stephen Wolfram breaks down how ChatGPT works. Working from the simple claim "it's just adding one word at a time," the computer scientist describes how neural nets are trained to model 'human-like' tasks in 3D space and how they tokenize language, and concludes with a rumination on semantic grammar that recognizes the language model's successes (and limits).
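To make the "one word at a time" idea concrete, here is a minimal sketch under heavy simplifying assumptions: a toy table of word-pair counts stands in for ChatGPT's neural network, and the function names `train_bigrams` and `generate` are hypothetical, not taken from Wolfram's essay or from OpenAI. The loop only shows the shape of the process (look at the text so far, pick a next word, append it, repeat). As Wolfram's essay notes, the real system works on tokens and samples among likely continuations rather than always taking the single top choice.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count how often each word follows each other word (toy stand-in for a neural net)."""
    words = text.split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows

def generate(follows, start, n_words):
    """Extend `start` one word at a time, always picking the most frequent successor."""
    out = [start]
    for _ in range(n_words):
        candidates = follows.get(out[-1])  # .get avoids creating empty entries
        if not candidates:
            break  # no known continuation: stop early
        # Counter.most_common orders ties by first occurrence in the corpus
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)

corpus = "the cat sat on the mat and the cat ate the fish"
model = train_bigrams(corpus)
print(generate(model, "the", 5))
```

Always picking the top-ranked word (greedy decoding) keeps the toy deterministic, and it quickly loops back on itself, which hints at why real language models add randomness to their choices.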

“It’s more of a bullshitter than the most egregious egoist you’ll ever meet, producing baseless assertions with unfailing confidence because that’s what it’s designed to do.”

The procedurally generated Seinfeld spoof Nothing, Forever is temporarily banned on Twitch after lead character Larry Feinberg made transphobic remarks. The show's developers blame a switch from OpenAI's GPT-3 Davinci model to the smaller Curie model, made after Davinci caused outages. "We leverage OpenAI's content moderation tools, and will not be using Curie as a fallback in the future," they state on Discord. Launched in December 2022, the show became a viral hit for its nonsensical humour, nondescript style, and audience activity.
