1,576 days, 2,409 entries ...

Newsticker, link list, time machine: HOLO.mg/stream logs emerging trajectories in art, science, technology, and culture, every day

“If your full-time, eight-hours-a-day, five-days-a-week job were to look at each image in the dataset for just one second, it would take you 781 years.”

To illuminate how generative AI models like Midjourney and Stable Diffusion derive their worldview from 5.8 billion image-text pairs, German data journalist Christo Buschek and Canadian software artist Jer Thorp deconstruct the (only) open-source foundation dataset LAION-5B in an incisive visual essay. Digging deep into its troubled contents, its algorithmic rather than human curation, and its entanglements with other systems, the two warn about stacking “models on top of models, and training sets on top of training sets.”
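The 781-year figure quoted above can be roughly checked with back-of-the-envelope arithmetic. The sketch below is not taken from the essay: it assumes 52 working weeks per year with no holidays, and the function name and parameters are made up for illustration. With the 5.8 billion pairs cited here it lands near 775 years; a count of about 5.85 billion (an assumption about the unrounded dataset size) reproduces the quoted 781.

```python
def years_to_view(n_images, seconds_per_image=1, hours_per_day=8,
                  days_per_week=5, weeks_per_year=52):
    """Working years needed to look at every image once.

    Assumes a fixed viewing schedule with no holidays (hypothetical
    simplification, not a figure from the essay).
    """
    working_seconds_per_year = hours_per_day * 3600 * days_per_week * weeks_per_year
    return n_images * seconds_per_image / working_seconds_per_year


# Figure cited in the blurb: 5.8 billion image-text pairs.
print(round(years_to_view(5_800_000_000)))

# Assumed unrounded dataset size of roughly 5.85 billion pairs.
print(round(years_to_view(5_850_000_000)))
```

Either way the order of magnitude holds: no human reviewer, or realistic team of reviewers, could inspect the dataset by eye.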

“It’s more than Apple and Microsoft’s market caps combined. It’s more than any company has raised for anything in the history of capitalism.”

“A world of sanitized, corporate AI is probably better than one with millions of unhinged chatbots running amok. But I find it all a bit sad. We created an alien form of intelligence and immediately put it to work … making PowerPoints?”

“Academics call it the ‘liar’s dividend.’ It means that, because there is so much falseness in the world, it becomes really easy for bad actors to call ‘deepfake!’ on everything.”

“Copyright only works above a certain threshold of importance. That’s something you learn as an artist. Your voice doesn’t matter.”

“Silicon Valley runs on VC hype. VCs require hype to get a return on investment because they need an IPO or an acquisition. You don’t get rich by the technology working, you get rich by people believing it works long enough that one of those two things gets you some money.”

Time magazine identifies the 100 people who drive the current AI boom and the conversations around it in a special issue. In addition to staple industry names like OpenAI’s Sam Altman, Anthropic’s Dario and Daniela Amodei, and former Google CEO Eric Schmidt, Time 100 AI also highlights the work of AI researchers Kate Crawford, Timnit Gebru, and Meredith Whittaker, and artists Stephanie Dinkins, Sougwen Chung, and Holly Herndon, who “grapple with profound ethical questions” and try to use AI “to address social challenges.”

“How do we prevent these language models from scraping our archives? But if they are going to scrape our archives, how do we at least make sure that we’re getting paid for that?”

“People should know that it isn’t just Meta—at every social media firm there are workers who have been brutalized and exploited. But today I feel bold, seeing so many of us resolve to make change. The companies should listen—but if they won’t, we’ll make them.”

“We conclude that LLMs such as GPTs exhibit traits of general-purpose technologies, indicating that they could have considerable economic, social, and policy implications.”

“The breakneck deployment of half-baked AI, and its unthinking adoption by a load of credulous writers, means that Google—where, admittedly, I’ve found the quality of search results to be steadily deteriorating for years—is no longer a reliable starting point for research.”

“In the absence of a capacity to reason from moral principles, ChatGPT was crudely restricted by its programmers from contributing anything novel to controversial—that is, important—discussions. It sacrificed creativity for a kind of amorality.”

“It is easy now to imagine a setup wherein machines could prompt other machines to put out text ad infinitum, flooding the internet with synthetic text devoid of human agency or intent: gray goo, but for the written word.”

“What we actually saw was a preview of what future products will look like. A lot of hype, a lot of misstatements, and an exploitation of people’s lack of knowledge about what cognition is and what artificial systems can do.”

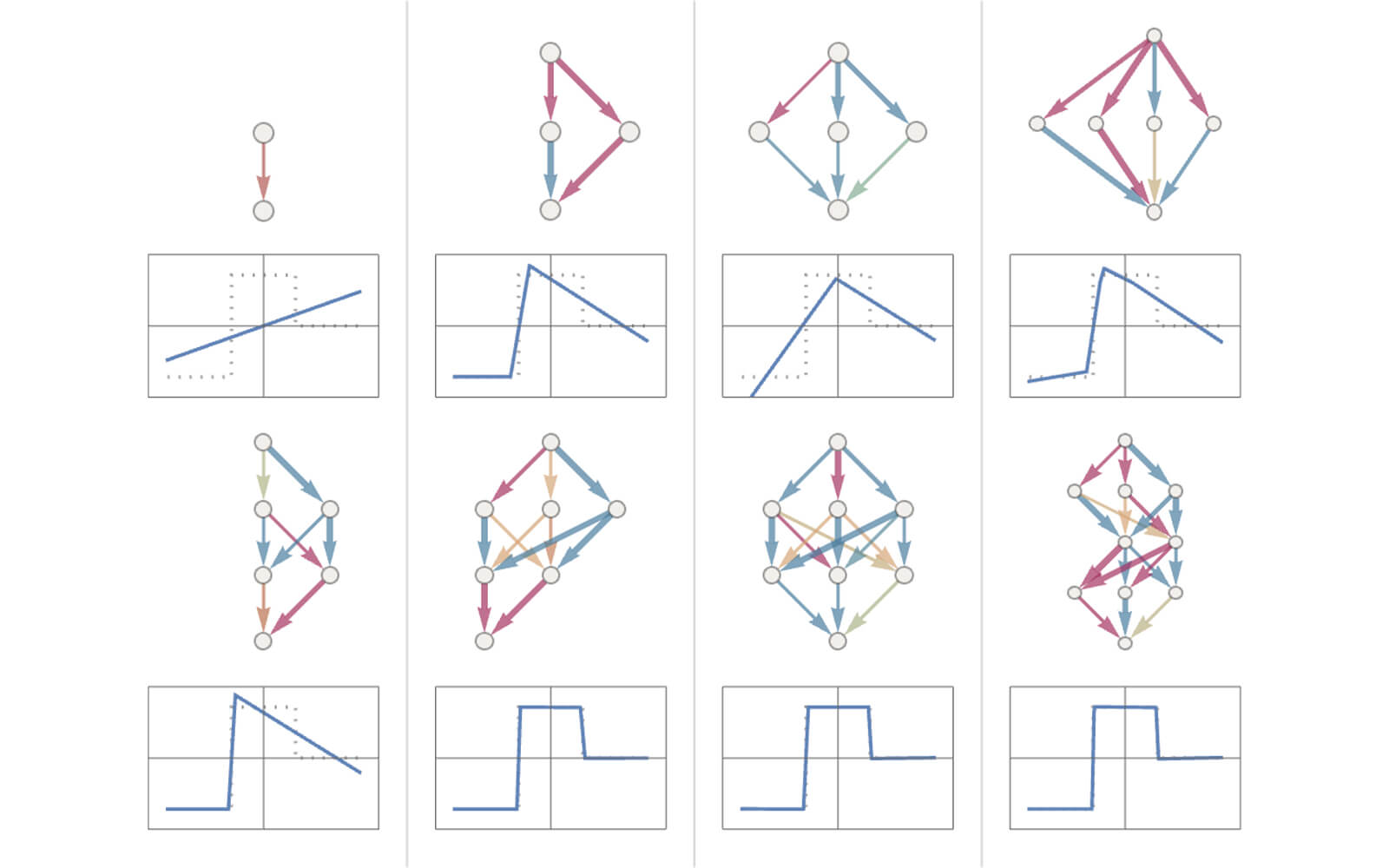

Full of playful examples—statistically modelling dropping cannonballs from different heights, a neural net theory of cat recognition—Stephen Wolfram breaks down how ChatGPT works. Working from the simple claim “it’s just adding one word at a time,” the computer scientist describes how neural nets are trained to model ‘human-like’ tasks in 3D space, how they tokenize language, and concludes with a rumination on semantic grammar that recognizes the language model’s successes (and limits).
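The “one word at a time” idea can be illustrated with a toy sketch. This is not how ChatGPT is implemented (per the description above, it uses a trained neural net over tokens); the example swaps in a simple bigram counter over a made-up corpus, and all names in it are hypothetical. It only shows the generation loop: pick a next word based on what came before, append it, repeat.

```python
import random
from collections import Counter, defaultdict


def build_bigram_counts(text):
    """Count, for each word, which words follow it in the text."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    return counts


def generate(counts, start, max_words=10, seed=0):
    """Extend `start` one word at a time, sampling each next word
    in proportion to how often it followed the previous word."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    output = [start]
    for _ in range(max_words - 1):
        followers = counts.get(output[-1])
        if not followers:
            break  # the last word was never followed by anything
        candidates = list(followers.keys())
        weights = list(followers.values())
        output.append(rng.choices(candidates, weights=weights, k=1)[0])
    return " ".join(output)


# Made-up toy corpus, purely for illustration.
corpus = "the cat sat on the mat and the cat saw the dog"
counts = build_bigram_counts(corpus)
print(generate(counts, "the", max_words=8))
```

A real language model replaces the lookup table with a neural net that scores every possible next token given the whole preceding text, but the outer loop has the same shape.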

“It’s more of a bullshitter than the most egregious egoist you’ll ever meet, producing baseless assertions with unfailing confidence because that’s what it’s designed to do.”

The procedurally generated Seinfeld spoof Nothing, Forever is temporarily banned on Twitch after lead character Larry Feinberg makes transphobic remarks. The show’s developers blame a switch from OpenAI’s GPT-3 Davinci model to its predecessor, Curie, after the former caused outages. “We leverage OpenAI’s content moderation tools, and will not be using Curie as a fallback in the future,” they state on Discord. Launched in December 2022, the show became a viral hit for its nonsensical humour, nondescript style, and audience activity.

“Being critical of extractive and exploitative technology is optimism. Saying that new tech shouldn’t happen at the expense of the vulnerable is an optimistic belief. Those who perpetuate the myth that criticism is anti-tech are the cynics.”
