Exhibitions, Research, Criticism, Commentary

A chronology of 3,585 references across art, science, technology, and culture

Tired of all the slop? Tega Brain’s browser extension Slop Evader (2025) uses the Google search API to only return content published before Nov 30, 2022, the day OpenAI unleashed ChatGPT onto the world. “Sure, the info is a couple of years old, but at least you know a human wrote it,” the New York-based artist quips on Instagram. Slop Evader is available to download for Chrome and Firefox.

“LLM users consistently underperformed at neural, linguistic, and behavioural levels. These results raise concerns about the long-term educational implications of LLM reliance and underscore the need for deeper inquiry into AI’s role in learning.”

“It is thus necessary to broaden the policy ambit to capture the greater concern of humanity—not just whether opportunities and credit belong to artists, but whether artistic endeavors themselves belong to human beings.”

“Transparency in AI is dying: No evaluations, no release notes, just vibes and more bad naming. This is really OpenAI embracing the product arc.”

Circular shapes, central openings, radiating lines: Radek Sienkiewicz, aka VelvetShark, has an idea why AI company logos resemble buttholes. “Circles represent wholeness, completion, and infinity—concepts that align with AI’s promise. They’re also friendly and non-threatening, qualities companies desperately want to project when selling potentially job-replacing technology.” The visual conformity reveals a race for legitimacy, Sienkiewicz concludes, and “the fear of standing out.”

“That’s using Studio Ghibli’s branding, name, work, and reputation to promote OpenAI products. It’s an insult. It’s exploitation.”

“The temptation to use A.I. as a shortcut is a symptom of a culture that has so devalued both writing and reading that it seems to some of my students like a rational choice to opt out of both.”

“If chatbots can be persuaded to change their answers by a paragraph of white text, or a secret message written in code, why would we trust them with any task, let alone ones with actual stakes?”

“This is Apple’s pitch distilled: the messy edges of your life, sanded down via Siri and brushed aluminum. You live; Apple expedites.”

“While politicians spent millions harnessing the power of social media to shape elections during the 2010s, generative AI effectively reduces the cost of producing empty and misleading information to zero.”

“Silicon Valley runs on VC hype. VCs require hype to get a return on investment because they need an IPO or an acquisition. You don’t get rich by the technology working, you get rich by people believing it works long enough that one of those two things gets you some money.”

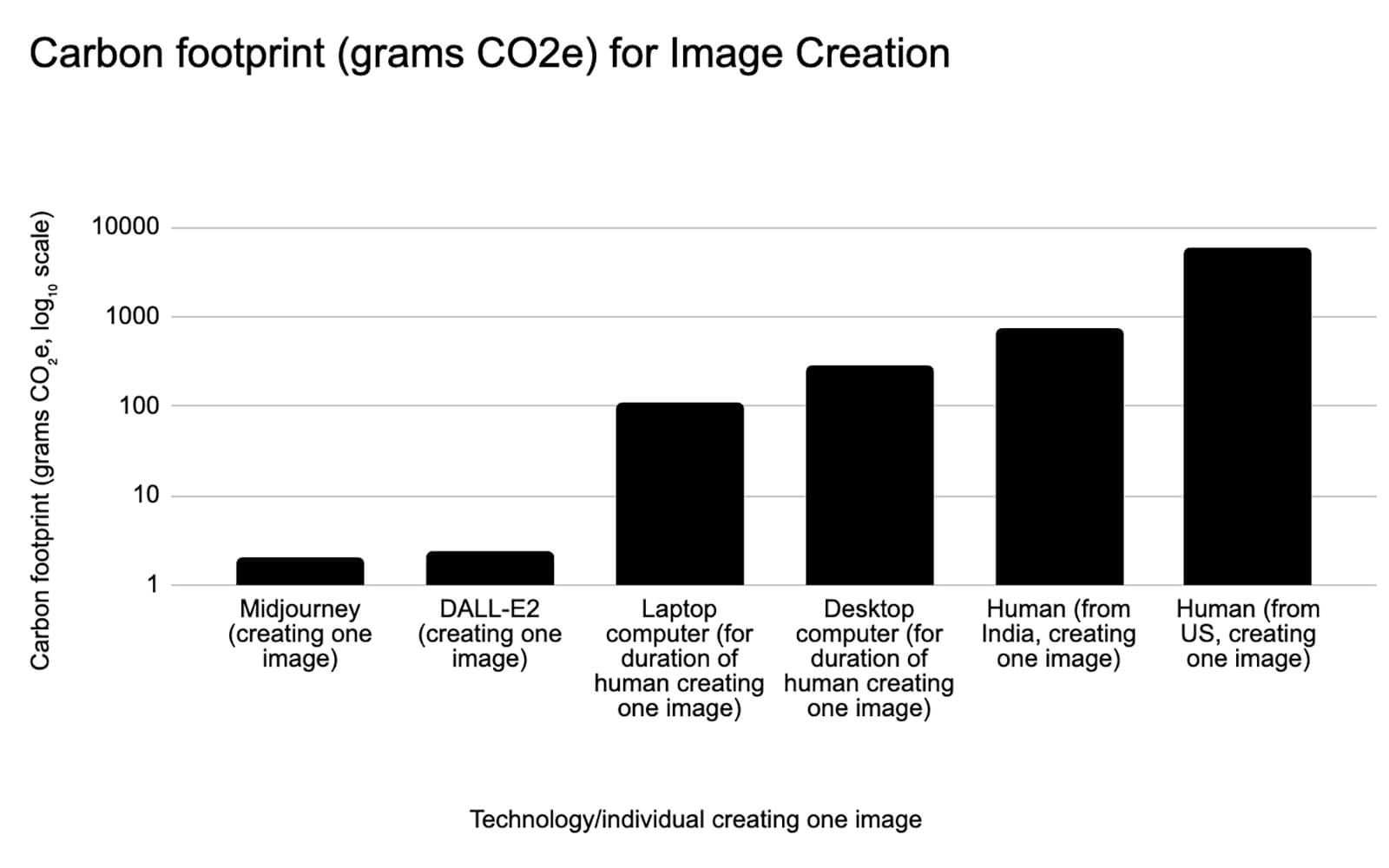

How does generative AI’s carbon footprint fare against human creators? Pretty well, according to a recent paper shared by American software artist Kyle McDonald. Comparing the energy use of text and image creation, University of California researcher Bill Tomlinson and team found that BLOOM, ChatGPT, Midjourney, and DALL-E 2 beat human writers and illustrators (and their computers) by wide margins: “An AI creating an image emits 310 to 2900 times less CO2,” states the paper. McDonald’s dark take: “New eugenics just dropped.”

“Art doesn’t play fetch with approval. It chews the slippers of convention and relishes in the surprise of its own bark.”

“As the white-collar workforce gets more and more automated, there’s gonna be a shift back to the office where people can prove to their co-workers that they’re in fact a human, not three ChatGPTs in a trenchcoat.”

“I’m so grateful that the AI revolution came along if for no other reason than that it showed us what it looks like when consumers actually get excited about something. It truly revealed that the crypto story was about 98% hype.”

German AI artist Mario Klingemann releases A.I.C.C.A., short for Artificially Intelligent Critical Canine (2023), into the current exhibition of Madrid’s Colección SOLO. Equipped with a camera, thermal printer, and ChatGPT, the furry AI art critic on wheels is designed to roam galleries and offer analysis—from its butt. The performative sculpture pokes fun at punditry but isn’t cynical, Klingemann assures. “Art critics play a very important role. The worst thing that can happen to an artist is to be ignored.”

A show parsing “shared discourse” in the wake of large language models (LLMs), Sarah Rothberg’s “SUPERPROMPT” opens at Bitforms San Francisco. Through several performances, the American artist takes aim at the veracity of statements made by ChatGPT and its ilk, as well as virtualized social convention. In NEW MEETINGS (2021-, image), for example, Rothberg and mystery guests convene in VR to engage in a conversational game that demonstrates “how architecture and social arrangements distribute power.”

“ChatGPT is an advertisement for Microsoft. It’s an advertisement for studio heads, the military, and others who might want to actually license this technology via Microsoft’s cloud services.”
