Exhibitions, Research, Criticism, Commentary

A chronology of 3,585 references across art, science, technology, and culture

“Why must everything, even death, be subjected to the bureaucratic rationality that has swallowed the horizon of what’s possible with computation?”

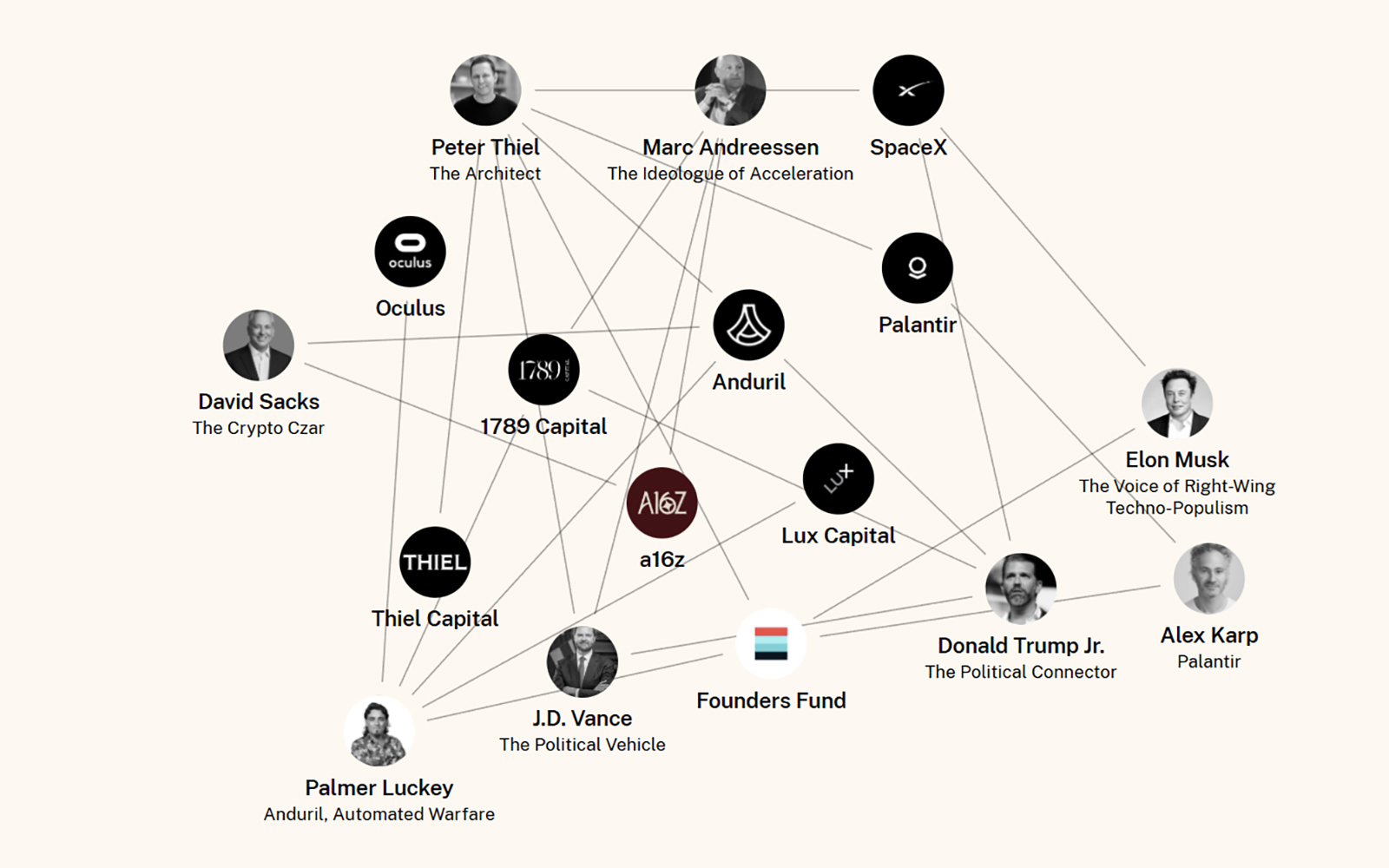

Francesca Bria and XOF Research launch The Authoritarian Stack, an interactive data visualization tracking Silicon Valley’s ascendant “patriotic tech” coalition. Drawing on an open-source dataset of 250+ actors (Alexander Karp, Palmer Luckey, Peter Thiel, etc.) and $45 billion in financial flows, the research traces how cloud platforms, AI, and defense tech are converging into privatized infrastructure around core state functions, a system where “corporate boards, not public law, set the rules.”

“There is no path to profitability for subprime AI. These absurd data centers will stand sentinel over the ruins of our fake economy like moai on Easter Island.”

“There are forms of magical thinking that I think is a part of the popular imagination around AGI. It connects really well to the kinds of religious imaginaries that you see in conspiracy thinking today.”

“Under the guise of technological inevitability, companies are using the AI boom to rewrite the social contract—laying off employees, rehiring them at lower wages, intensifying workloads, and normalizing precarity.”

“Where Silicon Valley imagines itself through Middle-earth, Mars, and cyberspace, China’s tech world thinks simultaneously in terms of the jianghu.”

“Consent is an ongoing, enthusiastic social contract that is mutable. You can agree to something, experience it, and then decide you don’t actually like it, and then you change the terms. But all of this needs to be in discussion in perpetuity.”

“If you were hoping for Al Pacino chewing scenery, this might be a bit of a letdown.”

“The multiday treatment program at NOX, with its careful progression through diagnostic spaces, doesn’t cure a car of contemplation but manages it, containing affect within acceptable parameters.”

Cory Doctorow, Enshittification

“Suzanne Treister: Prophetic Dreaming” at Modern Art Oxford (UK) traces the British para-disciplinary artist’s enduring fascination with new technologies, power structures, and alternative belief systems. Treister’s first major institutional retrospective spans four decades and includes key works like the HEXEN 2.0 series of alchemical diagrams of big-picture histories, with the latest, HEXEN 5.0 (2023-25), linking AI, climate breakdown, and quantum computing.

In “Stuck? Click Here,” an Aksioma online exhibition via KUNSTSURFER, Dutch artist Lotte Louise de Jong replaces the web ads in your browser with slowed-down “stuck” clips, a niche fetish genre in which female bodies are wedged into sofas, office chairs, or washing machines. A metaphor for our entrapment in digital platforms, de Jong’s intervention “reminds us that ‘stuck’ is no longer just a fetish but a default mode of existence online,” writes curator Hsiang-Yun Huang.

Suzanne Treister, Prophetic Dreaming

“The end game is just-in-time per-user generated content, leaving everyone hyper addicted and isolated with little to no shared cultural references between us.”

“AI is the asbestos we are shovelling into the walls of our society, and our descendants will be digging it out for generations.”

“The goal is not to react after a major incident occurs… but to prevent large-scale, potentially irreversible risks before they happen. If nations cannot yet agree on what they want to do with AI, they must at least agree on what AI must never do.”

“We went from MONDO 2000 being the main magazine of the internet—a weird, psychedelic, hypertext universe, Gen X free-for-all—to WIRED, which was saying ‘you can make money,’ and ‘you can invest in the future.’ Once people are betting on the future they don’t want infinite possibility anymore.”

“Each search helped Google make more advertising money than rivals and gave it more data to improve its ability to accurately field queries. It also gave Apple billions of reasons not to develop its own search engine.”

Brazilian researchers Joana Varon and Lucía Egaña Rojas reconsider AI discourse from a decolonial feminist perspective, proposing “compost engineers” as an alternative model rooted in soil ecology and fungal networks. Drawing on Donna Haraway’s speculative fiction, their manifesto advocates for regenerative technologies rather than Silicon Valley’s extractive approaches. “Instead of artificial, we propose natural, organic, multiple, chaotic,” they write of alternative frameworks that heal rather than dominate.
