Stephen Wolfram Explains How ChatGPT Works (It Only Takes 20,000 Words)

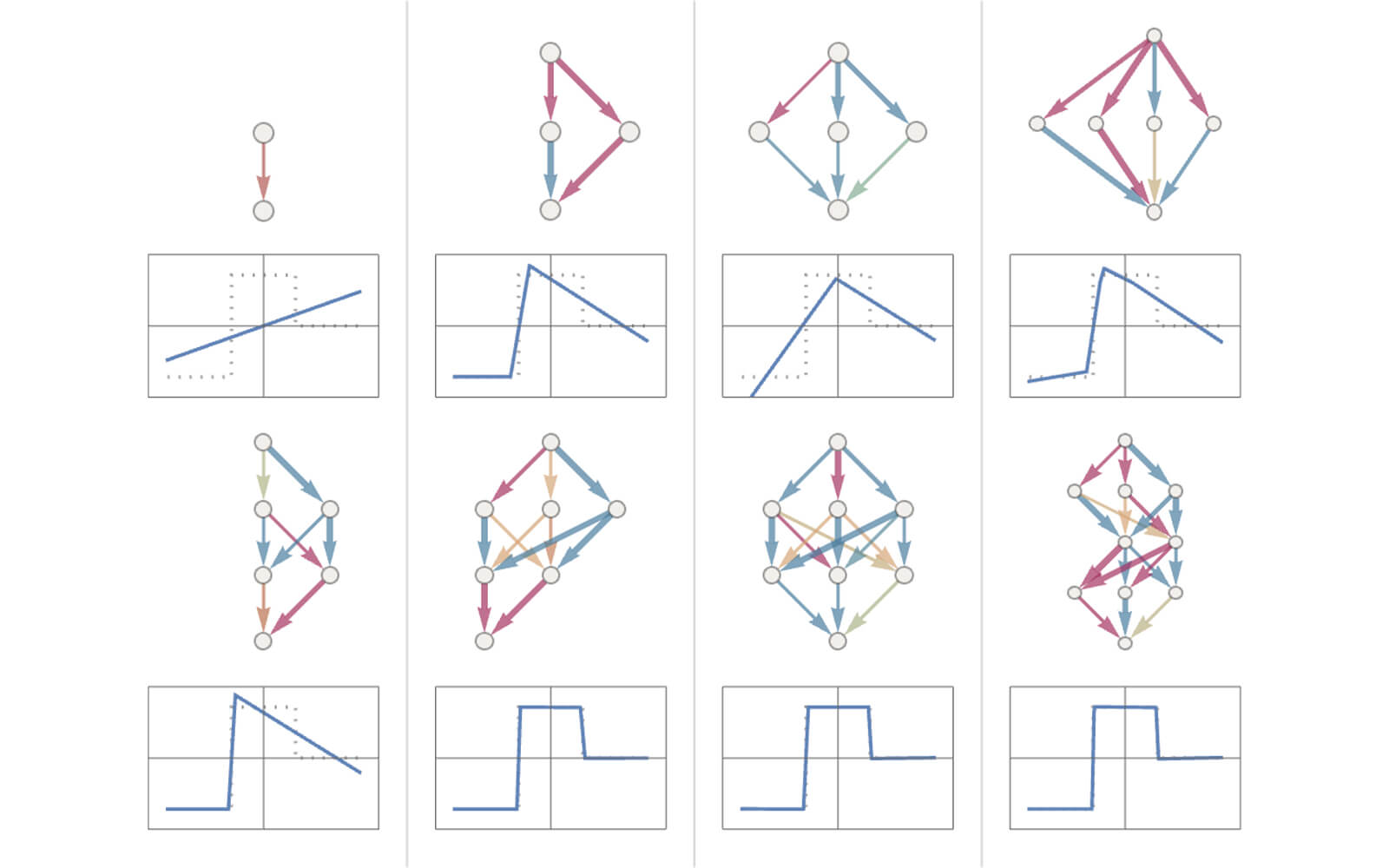

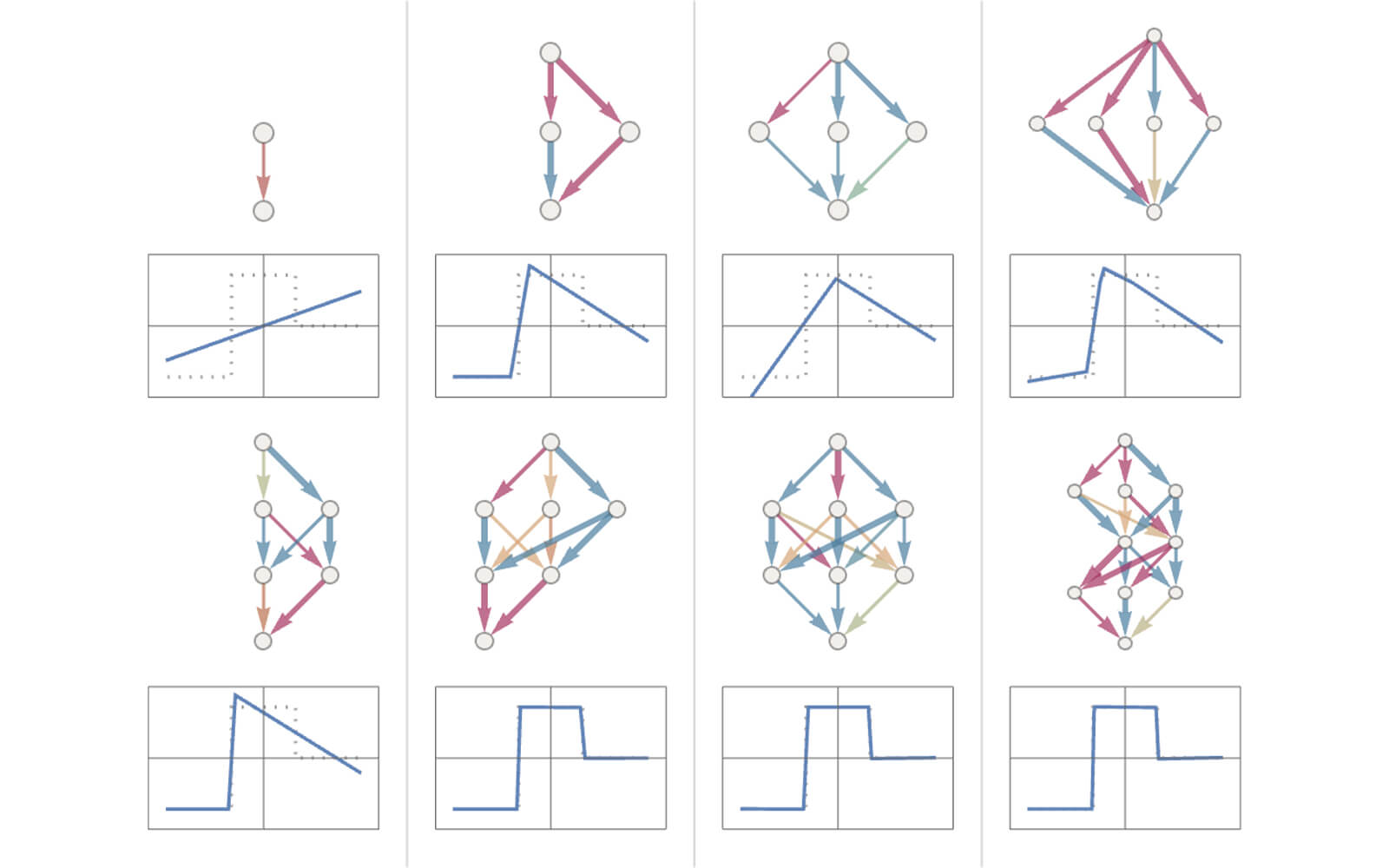

Using playful examples, such as a statistical model of cannonballs dropped from different heights and a neural net theory of cat recognition, Stephen Wolfram breaks down how ChatGPT works. Starting from the simple claim that “it’s just adding one word at a time,” the computer scientist describes how neural nets are trained to model ‘human-like’ tasks in 3D space and how they tokenize language, then concludes with a rumination on semantic grammar that recognizes the language model’s successes (and limits).
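The "one word at a time" idea can be illustrated with a toy sketch. This is not how ChatGPT is implemented: ChatGPT uses a large neural net over tokens and samples from a probability distribution, whereas the sketch below assumes a simple table of word-pair counts and always picks the most frequent follower. The corpus and the function names `build_bigram_counts` and `generate` are made up for illustration.

```python
from collections import Counter, defaultdict


def build_bigram_counts(text):
    """Count, for each word, which words follow it in the text."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def generate(counts, start, max_words=5):
    """Extend `start` one word at a time, always taking the most frequent follower."""
    output = [start]
    for _ in range(max_words):
        followers = counts.get(output[-1])
        if not followers:
            break  # no known continuation for the last word
        output.append(followers.most_common(1)[0][0])
    return " ".join(output)


# Hypothetical toy corpus, chosen only to keep the example small.
corpus = "the cat sat on the mat and the cat slept"
counts = build_bigram_counts(corpus)
print(generate(counts, "on", max_words=2))  # prints "on the cat"
```

Each pass through the loop looks only at the text so far and appends a single word, which is the same outer loop the claim describes, with a far simpler model inside it.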
